Occasionally we run across sentiments like this:

[R]ecently I see [unit tests] as things that do nothing but detract value. The only time the tests ever break is when we develop a new feature, and the tests need to be updated to reflect it. [...] If unit tests ever fail, it's because I'm simply working on a new feature. Never, ever, in my career has a failing unit test helped me understand that my new code is probably bad and that I shouldn't do it. [...] Unit tests are supposed to be guard rails against new, bad code going out. But they only ever guard against new, good code going out, so to speak.

Let's start by acknowledging that this situation is a reality for many developers and teams. In the best case, unit tests take time to write and maintain. What value is generated in that time?

As Specs

Unit tests over a class (module, package, etc.) are how we ensure every method (function, routine, etc.) in it complies with the specification, and if there is no spec, the unit tests become the de facto spec. The unit tests should therefore demonstrate to newcomers how to use the class.

If that makes it sound like I'm expecting tests to behave as documentation, it's because I am: Human-readable comments rot, but CI failures are forever, or at least final. Our forebears created a new, better kind of documentation that breaks your merge when it changes the rules. Let us use it in their honor. Normalize learning from unit tests, and their value becomes apparent.

With respect to the OP's point, every feature branch that breaks a unit test implies a potential broken contract somewhere else in the codebase, because it means your assumptions about the class in question have changed. When the tests pass after such a change, it just means you didn't break any condition that you're currently testing for. The question then becomes, just how good are your integration tests, really?

As Verified Assumptions

OP is concerned with failures on commits to feature branches, or merges to trunks like main. Test success is obviously critical at those points, but that doesn't entirely capture the value of the tests.

Imagine a bug ticket about some unexpected behavior in production: You have a background job that fails every so often because something deep in the call stack isn't receiving a value it thinks it should. All your tests are passing—you wouldn't have merged otherwise—but all that means is that you're not testing for this bug.

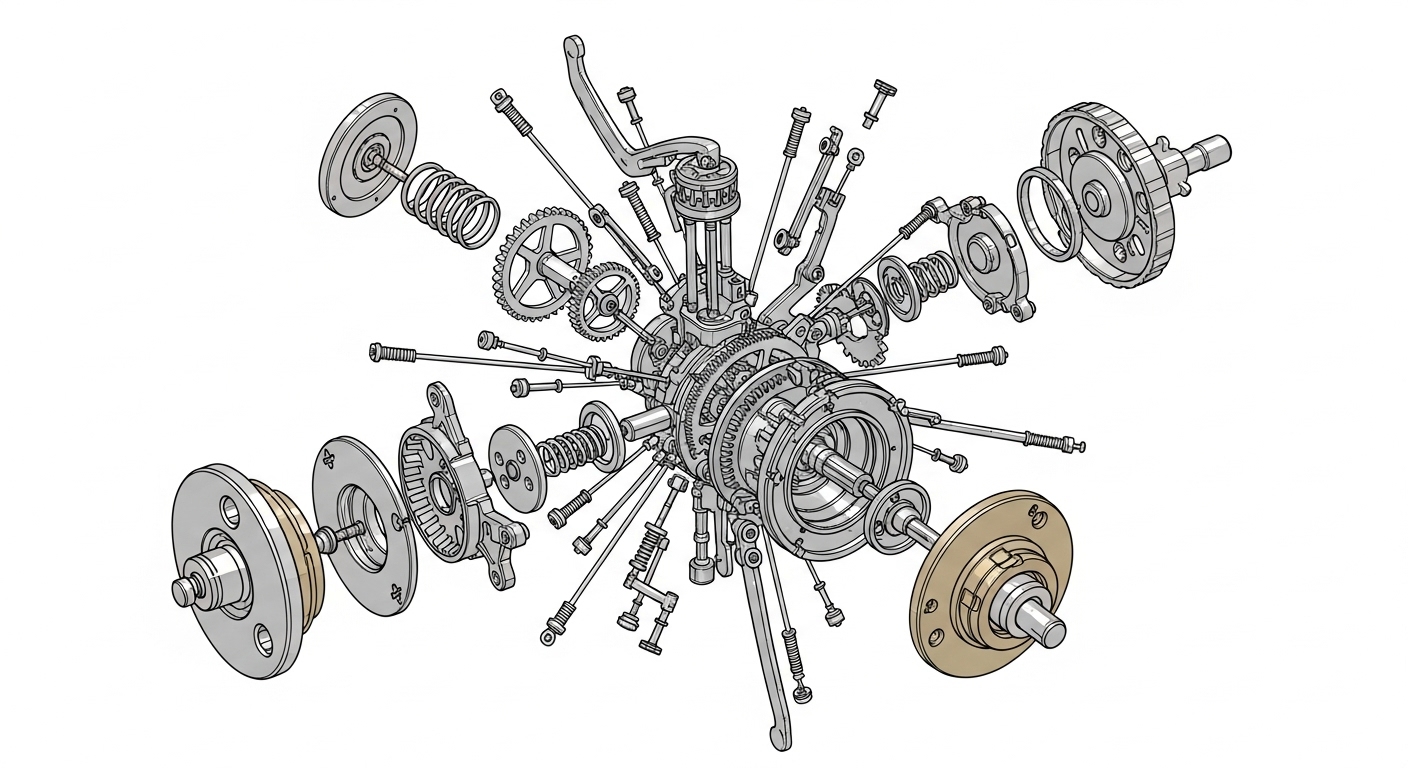

Integration tests will show you that certain operations, user stories, and so on work under normal assumptions, and hopefully that they fail as they should on predictable errors, but when integration tests break, they don't generally tell you why they broke. They can't, because as integration tests, the things they're integrating are the very failure points that you need to tease apart. Unit tests verify your assumptions about the behavior of those failure points. If you have comprehensive unit testing that's passing when your application is failing, you have many of your assumptions verified in advance. If you don't have unit testing, you have to break down each of those failure points to be sure.

Unit tests are visibility into the causation of bugs, not just their presence. And they provide value not just in failing when they're supposed to on commits and merges, but passing when they're supposed to, when something mysterious has broken.

As Cheap Tests of Deep Permutations

A recent contract featured a complicated access control system. Even trivial operations had, in principle, a vast array of scenarios to test against. Users could be system admins, support staff, or low-level support for every tenant in the application; every tenant in the application supported another dozen or so roles; users may have roles directly under their tenant organization, but some roles also require direct assignment to nested resources under the tenant organization; users may belong to multiple tenant organizations, and so on.

Testing every one of these scenarios in request or system tests would be unmanageable. Every scenario implies seeded records and an expensive HTTP request, and every controller implies a whole bunch of boilerplate, so resource consumption grows geometrically. On this particular project, the client had already requested we streamline our system tests for performance and maintainability.

Thankfully, we were using Pundit, so the actual access in the application was mediated by action-specific methods (new?, edit?, index?, etc.) that in turn called boolean methods on a user object like user.system_admin? or user.technician_for?(some_resource). The complicated (and expensive) database structure of users, roles, organizations, organization types, and so on was in the end just a way of making those boolean methods return true or false. By selectively stubbing them, we were able to create unit tests for the higher-level permissions like new? and edit? that verify they behave as they should under all permutations of the underlying user access.

We created a group of shared examples, which we called like so:

```ruby
RSpec.describe WidgetPolicy, type: :policy do
  subject { described_class }

  let(:pundit_user) { build(:user, ...) }
  let(:pundit_resource) { build(:widget, ...) }

  context "permissions" do
    permissions :show? do
      it_behaves_like "it permits the given roles", [
        :system_admin,
        :owner,
        :technician
      ]
    end
  end
end
```

Each role corresponds to a boolean method on the pundit_user object (#system_admin?, #owner?, #technician?). The shared examples:

- Stub all boolean methods to return false, and assert the permission is denied:

  ```ruby
  it { is_expected.to_not permit(pundit_user, pundit_resource) }
  ```

- Iterate over the given methods, stubbing each one in turn (and no others) to return true, and assert the permission is granted:

  ```ruby
  it { is_expected.to permit(pundit_user, pundit_resource) }
  ```
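To see the mechanics without RSpec or Pundit loaded, the two steps above can be sketched in plain Ruby. This is a minimal illustration, not the project's actual shared examples: FakeUser, ROLE_PREDICATES, the billing_clerk? role, check_permitted_roles, and the body of WidgetPolicy#show? are all hypothetical stand-ins, with FakeUser playing the part of the stubbed pundit_user.

```ruby
# Framework-free sketch of the shared-example logic. Every name below is
# a hypothetical stand-in, not the project's real code.

ROLE_PREDICATES = %i[system_admin? owner? technician? billing_clerk?].freeze

# Stands in for a user whose boolean methods have been stubbed:
# each predicate returns false unless it is listed in `truthy`.
class FakeUser
  def initialize(truthy = [])
    @truthy = truthy
  end

  ROLE_PREDICATES.each do |predicate|
    define_method(predicate) { @truthy.include?(predicate) }
  end
end

# A Pundit-style policy: each permission is a boolean method that only
# consults the user's role predicates.
class WidgetPolicy
  def initialize(user, record)
    @user = user
    @record = record
  end

  def show?
    @user.system_admin? || @user.owner? || @user.technician?
  end
end

# Returns a list of failure messages; an empty list means both steps passed.
def check_permitted_roles(policy_class, permission, permitted, record: nil)
  failures = []

  # Step 1: every predicate returns false, so access must be denied.
  if policy_class.new(FakeUser.new, record).public_send(permission)
    failures << "#{permission} was granted with no roles"
  end

  # Step 2: make exactly one listed predicate true at a time and
  # expect access to be granted.
  permitted.each do |predicate|
    user = FakeUser.new([predicate])
    next if policy_class.new(user, record).public_send(permission)

    failures << "#{permission} was denied with only #{predicate}"
  end

  failures
end

failures = check_permitted_roles(WidgetPolicy, :show?, %i[system_admin? owner? technician?])
puts failures.empty? ? "show? permits the listed roles" : failures
```

No database records are built anywhere in the sketch, which is the point: the policy logic is exercised purely through the return values of the boolean methods.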

Any relevant application scope (the widget is active, the blog post is unpublished, etc.) can be simulated in context blocks, and the shared examples can be called again. This lets you quickly and consistently test the actual policy logic without duplicating tests of the underlying boilerplate (which should have its own unit tests, run once and with a fake policy if necessary). Our system and request specs stayed slim enough to be usable, while we kept comprehensive coverage of our access control system.

(If it seems like the database records are the relevant subjects, rather than the boolean methods, ask yourself this: What happens when we put a cache between the database and the boolean methods? When you call a policy, it should be an implementation detail whether the result is served out of the database or the cache; in a unit test for a policy we’re interested in the return value of the policy method, not what code path it took to get there.)

These Are Things Integration Tests Don't Give You

Put another way, integration tests generally:

- don’t explain how to use their subjects, or what those subjects guarantee

- don’t explain how a feature broke, only that it did

- are often too expensive to comprehensively test many-dimensional problems

What Should(n't) I Unit Test?

Getting back to reality: Does that mean you should write a unit test every time you define a class, module, etc.? Probably not. I have a theory that the Reddit OP was thinking of unit tests descriptively, in that they say what a class does; from that perspective, I see how it looks like they only matter when you're changing the class in question. If you look at unit tests normatively though, i.e., describing what the class is supposed to do, it becomes clear that the unit test is ensuring that whatever changes you just made didn't upset the expected behavior elsewhere. Automatically fixing the unit test to match the new behavior (as described by OP) might work in simple cases, but in complex applications, it amounts to changing a specification by fiat and at will.

The argument above only applies to public interfaces though, so it suggests a natural limit to unit testing: anything you'd call an "interface" counts, and anything you'd call "implementation" doesn't. That applies to private methods within a class, but it also applies to "private classes," hidden from outside callers, if your language supports such a thing.
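In Ruby, one way to get a "private class" is private_constant. The sketch below is only an illustration, and the Invoicing module, its total_cents function, and the LineTotals helper are hypothetical names. The unit checks target the public function, while the helper class stays free to change.

```ruby
# Hypothetical example: Invoicing is the public interface; LineTotals is an
# implementation detail hidden from outside callers with private_constant.
module Invoicing
  # Public contract: total in cents for a list of
  # { price_cents:, quantity: } hashes.
  def self.total_cents(line_items)
    LineTotals.new(line_items).sum
  end

  class LineTotals
    def initialize(line_items)
      @line_items = line_items
    end

    def sum
      @line_items.sum { |item| item.fetch(:price_cents) * item.fetch(:quantity) }
    end
  end
  private_constant :LineTotals
end

# Unit checks exercise the public function only.
items = [{ price_cents: 250, quantity: 2 }, { price_cents: 100, quantity: 1 }]
raise "unexpected total" unless Invoicing.total_cents(items) == 600
raise "empty invoice should total zero" unless Invoicing.total_cents([]) == 0

# Outside callers cannot reference the helper class at all.
begin
  Invoicing::LineTotals
rescue NameError => e
  puts "hidden from callers: #{e.message}"
end
```

If LineTotals were later replaced by a different implementation, the checks above would not need to change, because they never referred to it.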

Consider Phoenix's concept of "contexts":

Contexts are dedicated modules that expose and group related functionality. [...] By giving modules that expose and group related functionality the name contexts, we help developers identify these patterns and talk about them.

Contexts get their own namespace and directory on the filesystem. The functions exposed by the context module constitute the public API of that system. This is a natural module to unit test.

The other thing to note about this definition is that it's pragmatic, rather than descriptive: You turn a module into a "context" by deciding that it provides a contract to outside callers, not by doing something in the code. It's a term of art. This means that if you sense a lower-level module is really providing a service, that's grounds to test it and ask questions later. Don't feel like unit testing one lower-level module implies the technical debt of unit testing the rest of them someday for parity. (Welcome to the inside of my head!) In the case of our hypothetical bug ticket above, I might give it coverage by adding a unit test to represent the condition that arose in production, in the tests for the class where the bug was uncovered.
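As a rough sketch of what that coverage could look like, suppose the hypothetical bug was traced to a class that builds a reminder subject line and assumed a contact name was always present. ReminderSubject, its build method, and the strings below are invented for illustration; the point is only that the production condition becomes one more pinned case alongside the behavior that was already assumed.

```ruby
# Hypothetical class where the production bug was traced: it assumed every
# contact had a name, but the background job sometimes passed nil.
class ReminderSubject
  def self.build(contact_name)
    name = contact_name.to_s.strip
    name = "there" if name.empty?
    "Hi #{name}, your invoice is due"
  end
end

# The behavior we already assumed...
raise "named contact" unless ReminderSubject.build("Ada") == "Hi Ada, your invoice is due"

# ...and the conditions that arose in production, now pinned down.
raise "nil contact" unless ReminderSubject.build(nil) == "Hi there, your invoice is due"
raise "blank contact" unless ReminderSubject.build("   ") == "Hi there, your invoice is due"

puts "ReminderSubject handles missing contact names"
```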

The Birds and Bees of TDD

My first reading of that Reddit thread was (and is) technical, but I think there’s a non-technical lesson to draw as well. Going back to the top:

I've been working at a large tech company for over 4 years. While that's not the longest career, it's been long enough for me to write and maintain my fair share of unit tests. In fact, I used to be the unit test guy. I drank the kool-aid about how important they were; how they speed up developer output; how TDD is a powerful tool... I even won an award once for my contributions to the monolith's unit tests.

In other words, OP put their faith in best practices—in our shared craft—but it sounds like they weren’t rewarded with an in-depth understanding of the value of their work. The first four years of a development career are where a lot of people move from copying prior art to generating novel solutions. For curious people, this moment is crucial for morale, and as AI tools take over copypasta programming tasks, understanding why things work (or break) will become more of every developer’s portfolio, not just the senior ones. You want a culture of curiosity on your team, because you want to retain curious people, and because you want them to ship good (and well-tested) code. Talk to your true believers about unit tests before they learn about them from Reddit!